Requirement: on the front end, read the camera feed and use a model to decide whether a person is in frame and whether the lens is blocked.

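Implementation steps:

1. Show the camera feed in a `<video>` element.
2. Draw the video frames onto a `<canvas>` for display.
3. When taking a photo, convert the canvas's current frame into an image.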
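Step 2 copies each frame onto a 400×400 canvas with `drawImage(video, 0, 0, 400, 400)`. Browsers treat plain values such as `width: 400, height: 400` in getUserMedia constraints as ideals, not guarantees, so a webcam may still deliver, for example, 640×480, and that call would squash the frame. If you want to keep proportions, a centered crop is one option. The following is a minimal sketch that is not part of the original article. The helper name `centerCropRect` is hypothetical:

```javascript
// Sketch (not from the original article): compute a centered square crop of a
// video frame so it fills the 400x400 canvas without being stretched.
// `centerCropRect` is a hypothetical helper name.
function centerCropRect(videoWidth, videoHeight) {
  // Largest centered square that fits inside the frame
  const side = Math.min(videoWidth, videoHeight);
  return {
    sx: (videoWidth - side) / 2,  // horizontal offset into the source frame
    sy: (videoHeight - side) / 2, // vertical offset into the source frame
    sw: side,
    sh: side,
  };
}

// Intended browser usage (video.videoWidth is 0 until metadata has loaded):
// const { sx, sy, sw, sh } = centerCropRect(video.videoWidth, video.videoHeight);
// ctx.drawImage(video, sx, sy, sw, sh, 0, 0, 400, 400);
```

This uses the 9-argument form of `drawImage`, which takes a source rectangle followed by a destination rectangle.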

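Step 1: Install and import the required packages

Install the dependencies.

face-api.js is the core dependency and is required:

```shell
npm install face-api.js
```

element-ui only makes the buttons look nicer (optional). axios is used to send requests:

```shell
npm install element-ui axios -S
```

Import element-ui as described in its official documentation: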

```js
import Vue from 'vue';
import ElementUI from 'element-ui';
import 'element-ui/lib/theme-chalk/index.css';
import App from './App.vue';

Vue.use(ElementUI);

new Vue({
  el: '#app',
  render: h => h(App)
});
```

Download the models

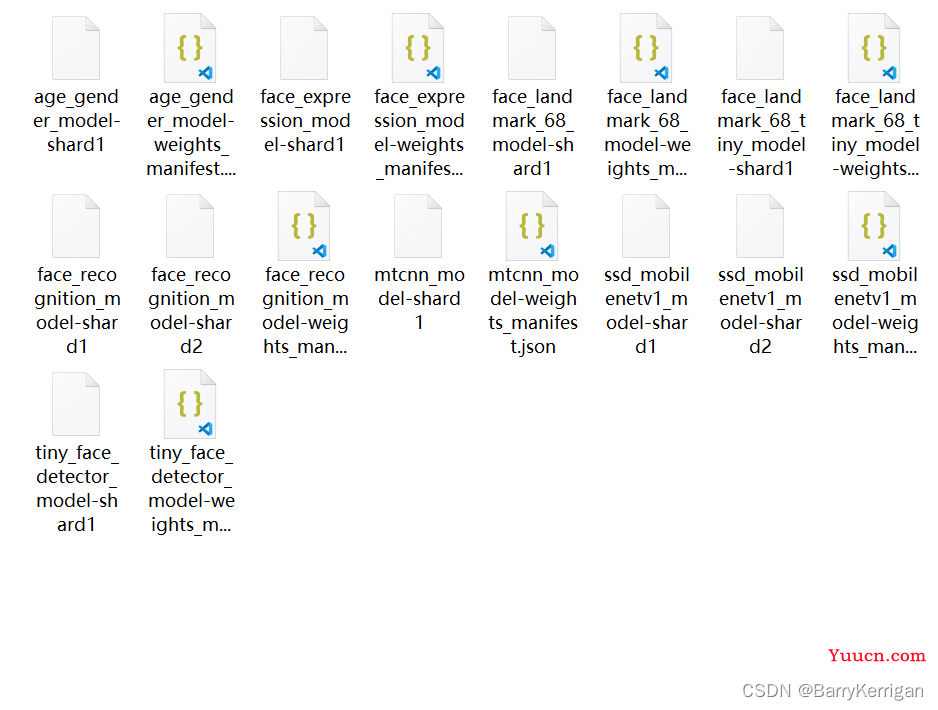

Download link: model address. If the site cannot be reached, you may need a VPN.

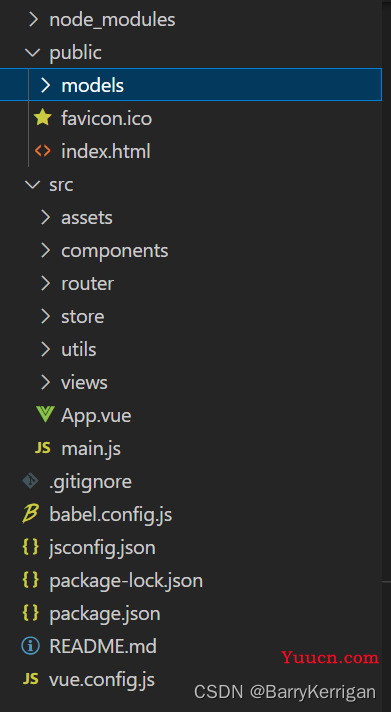

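Copy the project's `models` folder into the `public` folder of your Vue project, so the files are served from `/models` (the path the code loads them from).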

Step 2: Write the HTML first

```html
<template>
  <div>
    <el-button type="primary" @click="useCamera">Open camera</el-button>
    <el-button plain @click="photoShoot">Take photo</el-button>
    <el-alert
      :title="httpsAlert"
      type="info"
      :closable="false"
      v-show="httpsAlert !== ''">
    </el-alert>
    <div class="videoImage" ref="faceBox">
      <!-- The video only feeds the canvas, so it stays hidden -->
      <video ref="video" style="display: none;"></video>
      <canvas ref="canvas" width="400" height="400" v-show="videoShow"></canvas>
      <img ref="image" :src="picture" alt="" v-show="pictureShow">
    </div>
  </div>
</template>
```

Step 3: Now for the code

First, import the face-api.js package you installed:

```js
import * as faceApi from 'face-api.js'
```

These are the properties the component needs:

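1. The video and the picture are never shown at the same time:

```js
videoShow: false,
pictureShow: false,
```

2. Holds the URL of the picture generated by the photo:

```js
picture: '',
```

3. The code works directly with DOM elements, so the element references (taken from `$refs`) are kept in `data`:

```js
canvas: null,
video: null,
image: null,
```

4. Detection options passed to the model on every call, assigned during initialization (optional: you could write them directly in the detection code instead):

```js
options: '',
```

5. Handles of the warnings shown when more or fewer than one face is detected:

```js
noOne: '',
moreThanOne: '',
```

6. Hint shown when the user is not on HTTPS and the camera cannot be opened:

```js
httpsAlert: '',
```

7. Handle of the pending `setTimeout` that drives the detection loop:

```js
timeout: 0,
```

With the properties in place, initialization can begin: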

1. Load the models

2. Grab the DOM elements that will be used

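```js
async init() {
  // Grab the DOM nodes first, so they exist even if the user clicks
  // "Open camera" while the models are still loading
  this.video = this.$refs.video
  this.canvas = this.$refs.canvas
  this.image = this.$refs.image

  // Load the face detector and the 68-point landmark model from public/models
  await faceApi.nets.ssdMobilenetv1.loadFromUri("/models");
  await faceApi.loadFaceLandmarkModel("/models");
  this.options = new faceApi.SsdMobilenetv1Options({
    minConfidence: 0.5, // 0.1 ~ 0.9
  });
}
```

Open the camera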

The camera is opened with `navigator.mediaDevices.getUserMedia()`.
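The `try/catch` in `cameraOptions()` works because `navigator.mediaDevices` is `undefined` on insecure origins, so calling `getUserMedia` on it throws a `TypeError`. A more explicit option is to check `window.isSecureContext` before calling it. The following is an illustration, not the article's method. The window object is passed in as a parameter so the logic can be tested without a browser, which is an assumption of this sketch. `getCameraSupport` and its return strings are hypothetical:

```javascript
// Sketch: decide up front whether getUserMedia can be used.
// `win` is the browser's window object, passed in so the check is testable.
// `getCameraSupport` and its return values are hypothetical names.
function getCameraSupport(win) {
  // getUserMedia is only exposed in secure contexts (https:// or http://localhost)
  if (!win.isSecureContext) return 'insecure-context';
  const devices = win.navigator && win.navigator.mediaDevices;
  // Secure, but the browser (or an embedding webview) lacks the API
  if (!devices || typeof devices.getUserMedia !== 'function') return 'unsupported';
  return 'ok';
}
```

In the component, you could call `getCameraSupport(window)` at the top of `cameraOptions()`, set `httpsAlert` and return when the result is `'insecure-context'`, and only then request the stream.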

```js
useCamera() {
  this.videoShow = true
  this.pictureShow = false
  this.cameraOptions()
},

cameraOptions() {
  let constraints = {
    video: {
      width: 400,
      height: 400
    }
  }
  // Outside localhost/HTTPS, navigator.mediaDevices is undefined, so the call
  // below throws; the error is caught and a hint is shown
  try {
    navigator.mediaDevices.getUserMedia(constraints)
      .then((stream) => {
        // Feed the camera stream into the (hidden) video element
        this.video.srcObject = stream;
        this.video.play();
        this.recognizeFace()
      })
      .catch((error) => {
        // e.g. permission denied or no camera available
        console.log(error);
      });
  } catch (err) {
    this.httpsAlert = `You are not accessing this page over HTTPS.
Please open chrome://flags/#unsafely-treat-insecure-origin-as-secure,
add the current URL ${window.location.href} to the list,
and set "Insecure origins treated as secure" to Enabled.
Then restart the browser and visit this page again!`
  }
}
```

Detecting faces in the video

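Each call draws the current video frame onto the canvas and then schedules the next call with `setTimeout` (a recursion-like loop), so the canvas keeps showing the live video.

The canvas element is passed to face-api.js for detection.

The array that face-api.js returns tells you what is in frame: length 0 means no face was detected (nobody is there, or the lens is blocked), length 1 means one face, and so on.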
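The three branches in `recognizeFace` keep at most one warning on screen. The same decision can be written as a small pure function that is easy to test. This is a hypothetical refactoring sketch, not code from the article: the names `faceStatus`, `planWarningUpdate`, `WARNINGS` and the `'none'`/`'multiple'` keys are made up:

```javascript
// Hypothetical refactoring sketch of the branching in recognizeFace().
const WARNINGS = {
  none: 'No face detected',                     // nobody in frame, or lens blocked
  multiple: 'Multiple people detected in the frame',
};

// Map the length of the detection array to a status
function faceStatus(count) {
  if (count === 0) return 'none';
  if (count > 1) return 'multiple';
  return 'single';
}

// currentKey: the warning currently shown ('none', 'multiple' or null).
// Returns which warning to close, which to open, and the new current key.
function planWarningUpdate(currentKey, count) {
  const status = faceStatus(count);
  const wantedKey = status === 'single' ? null : status; // one face: no warning
  if (wantedKey === currentKey) {
    return { close: null, open: null, next: currentKey }; // nothing changes
  }
  return { close: currentKey, open: wantedKey, next: wantedKey };
}
```

Inside `recognizeFace`, you would call `.close()` on the stored `$message` handle when `close` is set, and `this.$message({ message: WARNINGS[open], type: 'warning', duration: 0 })` when `open` is set. This replaces the two handle properties with a single key.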

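```js
async recognizeFace() {
  // Stop the loop once the video is paused (e.g. after taking a photo)
  if (this.video.paused) return clearTimeout(this.timeout);
  // Draw the current video frame onto the canvas
  this.canvas.getContext('2d', { willReadFrequently: true }).drawImage(this.video, 0, 0, 400, 400);
  // Detect every face on the canvas, together with its 68 landmarks
  const results = await faceApi.detectAllFaces(this.canvas, this.options).withFaceLandmarks();
  if (results.length === 0) {
    // No face: close the "multiple people" warning, show "no face"
    if (this.moreThanOne !== '') {
      this.moreThanOne.close()
      this.moreThanOne = ''
    }
    if (this.noOne === '') {
      this.noOne = this.$message({
        message: 'No face detected',
        type: 'warning',
        duration: 0 // stays open until closed in code
      });
    }
  } else if (results.length > 1) {
    // Several faces: close "no face", show "multiple people"
    if (this.noOne !== '') {
      this.noOne.close()
      this.noOne = ''
    }
    if (this.moreThanOne === '') {
      this.moreThanOne = this.$message({
        message: 'Multiple people detected in the frame',
        type: 'warning',
        duration: 0
      });
    }
  } else {
    // Exactly one face: close both warnings
    if (this.noOne !== '') {
      this.noOne.close()
      this.noOne = ''
    }
    if (this.moreThanOne !== '') {
      this.moreThanOne.close()
      this.moreThanOne = ''
    }
  }
  // Schedule the next frame
  this.timeout = setTimeout(() => {
    return this.recognizeFace()
  });
},
```

Take the photo and upload it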
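The upload has to send the image bytes. An object URL created with `URL.createObjectURL` is just a `blob:` string that only means something inside the current page, so appending it to `FormData` sends that string, not the picture. A minimal sketch of building the request body from the Blob instead. It assumes global `Blob` and `FormData` (browsers, Node.js 18+). The field name `file` comes from the article, while `buildPhotoForm` and the default file name `photo.png` are hypothetical:

```javascript
// Sketch: build the multipart body for the photo upload.
// Field name "file" is the article's; `buildPhotoForm` and "photo.png" are hypothetical.
function buildPhotoForm(photoBlob, fileName = 'photo.png') {
  const form = new FormData();
  // A Blob plus a file name is sent as a file part (name, type and bytes)
  form.append('file', photoBlob, fileName);
  return form;
}
```

The result can be passed directly as `data` to `axios`. In the browser, axios then sends it as `multipart/form-data`.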

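```js
async photoShoot() {
  // Nothing to capture if the camera has not been opened
  if (!this.video || !this.video.srcObject) return
  // Current canvas frame as a base64 data URL
  let dataUrl = this.canvas.toDataURL("image/png");
  // After taking the photo, hide the video and show the picture
  this.videoShow = false
  this.pictureShow = true
  // Stop every camera track; pausing the video also ends the detection loop
  this.video.srcObject.getTracks().forEach(track => track.stop())
  this.video.pause()
  if (dataUrl) {
    // Convert the base64 data URL into a file
    let blob = this.dataURLtoBlob(dataUrl);
    let file = this.blobToFile(blob, "photo.png");
    // Create an object URL so the <img> can display the file
    this.picture = window.URL.createObjectURL(file)
    // Send the photo to the backend: append the file itself, not the object URL
    let formData = new FormData()
    formData.append('file', file)
    axios({
      method: 'post',
      url: '/user/12345',
      data: formData
    }).then(res => {
      console.log(res)
    }).catch(err => {
      console.log(err)
    })
  } else {
    console.log('Failed to generate an image from the canvas')
  }
},
```

Image format conversion helpers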

Method 1: convert the base64 data URL into a Blob

Method 2: wrap the Blob in a File with a name and modification time

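```js
dataURLtoBlob(dataurl) {
  // "data:image/png;base64,xxxx" -> ["data:image/png;base64", "xxxx"]
  let arr = dataurl.split(','),
    mime = arr[0].match(/:(.*?);/)[1], // e.g. "image/png"
    bstr = atob(arr[1]),               // decode base64 into a binary string
    n = bstr.length,
    u8arr = new Uint8Array(n);
  // Copy each character code (0-255) into the byte array
  while (n--) {
    u8arr[n] = bstr.charCodeAt(n);
  }
  return new Blob([u8arr], {
    type: mime
  });
},

blobToFile(theBlob, fileName) {
  // Build a real File so the upload carries a name and modification time
  return new File([theBlob], fileName, {
    type: theBlob.type,
    lastModified: Date.now()
  });
},
```

Complete code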

```js
import axios from 'axios'
import * as faceApi from 'face-api.js'

export default {
  name: 'Recognize',
  data() {
    return {
      videoShow: false,
      pictureShow: false,
      // Picture URL
      picture: '',
      // DOM nodes used for detection
      canvas: null,
      video: null,
      image: null,
      timeout: 0,
      // Detection options for the model
      options: '',
      // Warning message handles
      noOne: '',
      moreThanOne: '',
      // Hint shown when the page is not served over HTTPS
      httpsAlert: '',
    }
  },
  mounted() {
    // Initialize
    this.init()
  },
  beforeDestroy() {
    clearTimeout(this.timeout);
    // Release the camera if it is still running
    if (this.video && this.video.srcObject) {
      this.video.srcObject.getTracks().forEach(track => track.stop())
    }
  },
  methods: {
    async init() {
      // Grab the DOM nodes first, so they exist even if the camera is opened
      // while the models are still loading
      this.video = this.$refs.video
      this.canvas = this.$refs.canvas
      this.image = this.$refs.image
      // Load the models from public/models
      await faceApi.nets.ssdMobilenetv1.loadFromUri("/models");
      await faceApi.loadFaceLandmarkModel("/models");
      this.options = new faceApi.SsdMobilenetv1Options({
        minConfidence: 0.5, // 0.1 ~ 0.9
      });
    },
    /**
     * Show the camera feed
     */
    useCamera() {
      this.videoShow = true
      this.pictureShow = false
      this.cameraOptions()
    },
    /**
     * Open the camera
     */
    cameraOptions() {
      let constraints = {
        video: {
          width: 400,
          height: 400
        }
      }
      // Outside localhost/HTTPS, navigator.mediaDevices is undefined and the
      // call below throws; the error is caught and a hint is shown
      try {
        navigator.mediaDevices.getUserMedia(constraints)
          .then((stream) => {
            // Feed the camera stream into the video element
            this.video.srcObject = stream;
            this.video.play();
            this.recognizeFace()
          })
          .catch((error) => {
            console.log(error);
          });
      } catch (err) {
        this.httpsAlert = `You are not accessing this page over HTTPS.
Please open chrome://flags/#unsafely-treat-insecure-origin-as-secure,
add the current URL ${window.location.href} to the list,
and set "Insecure origins treated as secure" to Enabled.
Then restart the browser and visit this page again!`
      }
    },
    /**
     * Face detection
     * Detects faces on the canvas node; each call draws
     * the current frame and schedules the next call
     */
    async recognizeFace() {
      if (this.video.paused) return clearTimeout(this.timeout);
      this.canvas.getContext('2d', { willReadFrequently: true }).drawImage(this.video, 0, 0, 400, 400);
      const results = await faceApi.detectAllFaces(this.canvas, this.options).withFaceLandmarks();
      if (results.length === 0) {
        if (this.moreThanOne !== '') {
          this.moreThanOne.close()
          this.moreThanOne = ''
        }
        if (this.noOne === '') {
          this.noOne = this.$message({
            message: 'No face detected',
            type: 'warning',
            duration: 0
          });
        }
      } else if (results.length > 1) {
        if (this.noOne !== '') {
          this.noOne.close()
          this.noOne = ''
        }
        if (this.moreThanOne === '') {
          this.moreThanOne = this.$message({
            message: 'Multiple people detected in the frame',
            type: 'warning',
            duration: 0
          });
        }
      } else {
        if (this.noOne !== '') {
          this.noOne.close()
          this.noOne = ''
        }
        if (this.moreThanOne !== '') {
          this.moreThanOne.close()
          this.moreThanOne = ''
        }
      }
      // Schedule the next frame so the canvas keeps showing the video
      this.timeout = setTimeout(() => {
        return this.recognizeFace()
      });
    },
    /**
     * Take a photo and upload it
     */
    async photoShoot() {
      if (!this.video || !this.video.srcObject) return
      // Current canvas frame as a base64 data URL
      let dataUrl = this.canvas.toDataURL("image/png");
      // After taking the photo, hide the video
      this.videoShow = false
      this.pictureShow = true
      // Stop the camera
      this.video.srcObject.getTracks().forEach(track => track.stop())
      this.video.pause()
      if (dataUrl) {
        // Convert the base64 data URL into a file
        let blob = this.dataURLtoBlob(dataUrl);
        let file = this.blobToFile(blob, "photo.png");
        // Object URL for displaying the picture
        this.picture = window.URL.createObjectURL(file)
        // Send the photo (the file, not the object URL) to the backend
        let formData = new FormData()
        formData.append('file', file)
        axios({
          method: 'post',
          url: '/user/12345',
          data: formData
        }).then(res => {
          console.log(res)
        }).catch(err => {
          console.log(err)
        })
      } else {
        console.log('Failed to generate an image from the canvas')
      }
    },
    /**
     * Convert the image into a Blob
     * dataurl: the base64 data URL
     */
    dataURLtoBlob(dataurl) {
      let arr = dataurl.split(','),
        mime = arr[0].match(/:(.*?);/)[1],
        bstr = atob(arr[1]),
        n = bstr.length,
        u8arr = new Uint8Array(n);
      while (n--) {
        u8arr[n] = bstr.charCodeAt(n);
      }
      return new Blob([u8arr], {
        type: mime
      });
    },
    /**
     * Create a File from the Blob
     * theBlob: the Blob
     * fileName: the file name
     */
    blobToFile(theBlob, fileName) {
      return new File([theBlob], fileName, {
        type: theBlob.type,
        lastModified: Date.now()
      });
    },
  }
}
```